
Open CASCADE Technology

6.7.0


This document provides an overview and practical guidelines for working with the OCCT automatic testing system. Reading the Introduction section should be sufficient for OCCT developers to use the test system to check their modifications of OCCT for regressions. The other sections describe the test system in more depth, which is needed for modifying the tests and adding new test cases.

The OCCT automatic testing system is organized around the DRAW Test Harness, a console application based on a Tcl (scripting language) interpreter extended with OCCT-related commands.

Standard OCCT tests are included with the OCCT sources and are located in the subdirectory tests of the OCCT root folder. Other test folders can be included in the scope of the test system, e.g. for testing applications based on OCCT.

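Logically, the tests are organized in three levels: test groups, each covering a certain OCCT functionality; test grids, each containing a set of related test cases within a group; and individual test cases.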

Some tests involve data files (typically CAD models), which are located separately and are not included with the OCCT code. The archive with the publicly available test data files should be downloaded from dev.opencascade.org and installed independently of the OCCT code.

Each modification made in the OCCT code must be checked for regressions by running the whole set of tests. The developer who makes the modification is responsible for running the tests available to them and ensuring that there are no regressions. Note that many tests are based on data files that are confidential and thus available only at OPEN CASCADE. Therefore, the official certification testing of changes before their integration into the master branch of the official OCCT Git repository (and finally into the official release) is always performed by OPEN CASCADE.

Each new non-trivial modification (improvement, bug fix, new feature) in OCCT should be accompanied by a relevant test case suitable for verifying that modification. This test case is to be added by the developer who provides the modification. If a modification affects the result of some test case(s), either the modification should be corrected (if it causes a regression) or the affected test cases should be updated to account for the modification.

The modifications made in the OCCT code and the related test scripts should be included in the same integration into the master branch.

Before running the tests, make sure to define the environment variable CSF_TestDataPath pointing to the directory containing the test data files. (The publicly available data files can be downloaded from http://dev.opencascade.org separately from the OCCT code.) The recommended way to do this is to add a file DrawAppliInit in the directory that is current at the moment DRAWEXE starts (normally $CASROOT). This file is evaluated automatically at DRAW startup. Example:
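A minimal DrawAppliInit along these lines, assuming the test data archive has been unpacked to the hypothetical folder d:/occt/test-data:

```tcl
# DrawAppliInit: evaluated automatically when DRAW starts in this directory.
# The path is hypothetical; use the folder where the test data was unpacked.
set env(CSF_TestDataPath) d:/occt/test-data
```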

All tests are run from the DRAW command prompt, so first run draw.tcl or draw.sh to start DRAW.

To run all tests, type the command testgrid followed by the path to the directory where the results will be saved. It is recommended that this directory be new or empty; use the option -overwrite to allow writing logs into an existing non-empty directory.

Example:
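For instance, with a hypothetical results folder d:/occt/results-1 (the command is typed at the DRAW prompt):

```tcl
testgrid d:/occt/results-1
```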

If an empty string is given as the log directory name, the name is generated automatically from the current date and time, prefixed by results_. Example:
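Passing an empty string from the DRAW prompt could look like this:

```tcl
# The log directory is named automatically as results_<date and time>
testgrid ""
```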

To run only a certain group or grid of tests, give additional arguments indicating the group and (if needed) the grid. Example:
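A sketch using the group caf and its grid basic, both mentioned later in this document, with hypothetical results folders:

```tcl
# Run all grids of the group caf
testgrid d:/occt/results-2 caf
# Run only the grid basic of the group caf
testgrid d:/occt/results-3 caf basic
```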

As the tests progress, the result of each test case is reported. At the end of the log, a summary of the test cases is output, including the list of detected regressions and improvements, if any. Example:

The tests are considered non-regressive if only the statuses OK, BAD (i.e. known problem), and SKIPPED (i.e. not executed, e.g. because a data file is missing) are reported. See the Interpretation of test results chapter for details.

The detailed logs of the test run are saved in the specified directory and its subdirectories. The cumulative HTML report summary.html provides links to the reports on each test case. An additional report, TESTS-summary.xml, is output in JUnit-style XML format and can be used for integration with Jenkins or another continuous integration system. Type help testgrid at the DRAW prompt to get help on the additional options supported by the testgrid command.

To run a single test, type the command test followed by the names of the group, grid, and test case.

Example:
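For example, assuming the grid caf/basic contains a case named A1:

```tcl
test caf basic A1
```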

Note that the intermediate output of the script is normally not shown. To see the intermediate commands and their output, type the command decho on before running the test case (type decho off to disable echoing when it is not needed). The detailed log of the test can also be obtained after its execution with the command dlog get.
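A possible interactive sequence, again assuming that a case caf/basic/A1 exists:

```tcl
# Echo intermediate commands and their output while the case runs
decho on
test caf basic A1
decho off
# Alternatively, print the detailed log after the test has finished
dlog get
```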

Standard OCCT tests are located in the subdirectory tests of the OCCT root folder ($CASROOT). Additional test folders can be added to the test system by defining the environment variable CSF_TestScriptsPath. This should be a list of paths separated by semicolons (;) on Windows or colons (:) on Linux or Mac. Upon DRAW launch, the path to the tests subfolder of OCCT is automatically added at the end of this variable. Each test folder is expected to contain an optional file parse.rules, defining patterns for the interpretation of test results common to all groups in this folder, and one or several test group directories.
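The structure of the CSF_TestScriptsPath value can be illustrated in plain Tcl. The two folders below are hypothetical, and the separator is computed from tcl_platform only for this illustration:

```tcl
# Separator of the path list: ";" on Windows, ":" on Linux and Mac
set sep [expr {$tcl_platform(platform) eq "windows" ? ";" : ":"}]

# Hypothetical CSF_TestScriptsPath after DRAW startup: a user test folder,
# followed by the OCCT tests folder that DRAW appends at the end
set scripts_path [join {/home/user/mytests /opt/occt/tests} $sep]

# Split the value back into the individual test folders, in order
set folders [split $scripts_path $sep]
foreach folder $folders {
    puts "test folder: $folder"
}
```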

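Each group directory contains the file grids.list, which defines the list of test grids in the group; the test grids themselves (subdirectories); optional files begin, end, and parse.rules; and an optional subdirectory data.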

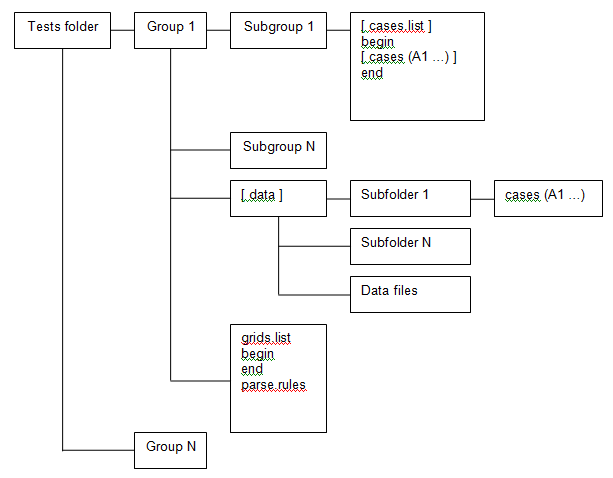

By convention, the names of test groups, grids, and cases should contain no spaces and be lowercase. The names begin, end, data, parse.rules, grids.list, and cases.list are reserved. The general layout of test scripts is shown in Figure 1.

Figure 1. Layout of tests folder

A test folder usually contains several directories representing test groups (Group 1 … Group N). Each group directory contains the test grids for a certain OCCT functionality, and its name corresponds to this functionality. Example:

caf, mesh, offset

The test group must contain the file grids.list, which defines the ordered list of grids in this group in the following format:

Example:
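Assuming the usual layout of one grid per line, a three-digit ordinal number followed by the grid name, the grids.list of the caf group (whose grids basic, bugs and presentation are listed below) might look as follows; the numbering shown is hypothetical:

```
001 basic
002 bugs
003 presentation
```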

The file begin is a Tcl script. It is executed before every test in the current group. Usually it loads the necessary DRAW commands, sets common parameters, and defines additional Tcl functions used in test scripts. Example:
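A hypothetical group begin script. pload, cpulimit and isdraw are DRAW commands, while the helper procedure check_result is an illustrative name, not part of OCCT:

```tcl
# Load the DRAW commands used by all tests of the group
pload TOPTEST
# Common parameter: limit the CPU time of each test case to 300 seconds
cpulimit 300
# Helper available to all test scripts of the group (hypothetical)
proc check_result {name} {
    if {![isdraw $name]} {
        puts "Error: shape $name was not created"
    }
}
```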

The file end is a Tcl script. It is executed after every test in the current group. Usually it checks the results of the script, makes a snapshot of the viewer, and writes TEST COMPLETED to the output. Note: the string TEST COMPLETED must be present in the output to signal that the test finished without a crash. See the Creation and Modification of Tests chapter for more information. Example:
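A sketch of a group end script, assuming that each test case stores its final shape in a DRAW variable named result and displays it in a 3D viewer; logdir and casename are the variables set by testgrid and test:

```tcl
# Validate the result shape if the test case created one
if {[isdraw result]} {
    checkshape result
}
# Snapshot of the 3D viewer, prefixed by the case name (requires an open view)
vdump $logdir/${casename}.png
# Mandatory marker signalling that the test finished without a crash
puts "TEST COMPLETED"
```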

The test group may contain a parse.rules file. This file defines patterns used to analyze the test execution log and decide the status of the test run. Each line in the file should specify a status (a single word), followed by a regular expression delimited by slashes (/) that is matched against the lines of the test output log to check whether they correspond to this status. The regular expressions support a subset of the Perl regular expression syntax. The rest of the line can contain a comment message, which is added to the test report when this status is detected. Example:
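A possible parse.rules, reusing the checkshape rule quoted later in this document together with a generic rule for messages containing the word Error (the second rule and both comment lines are assumptions):

```
# shapes reported as invalid by checkshape
FAILED /\bFaulty\b/ bad shape
# messages printed by test scripts
FAILED /\bError\b/ error
```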

Lines starting with a # character and blank lines are ignored to allow comments and spacing. See the Interpretation of test results chapter for details.

If a line matches several rules, the first one applies. Rules defined in the grid are checked first, then the rules in the group, then the rules in the test root directory. This allows defining rules at the grid level with the status IGNORE to ignore messages that would otherwise be treated as errors by the group-level rules. Example:
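The first-match logic can be sketched in plain Tcl. The procedures parse_rule_line, parse_rules and match_line are hypothetical (this is not the actual DRAW implementation), and mapping Perl's \b to Tcl's \y word boundary is an assumption about how the Perl-like syntax could be supported. Because the grid rule with status IGNORE is checked before the group rules, it suppresses the matching Error message:

```tcl
# Parse one rule line into {status regexp comment};
# returns an empty list for blank lines and comments.
proc parse_rule_line {line} {
    set line [string trim $line]
    if {$line eq "" || [string index $line 0] eq "#"} {
        return {}
    }
    if {![regexp {^(\S+)\s+/(.*)$} $line -> status rest]} {
        error "malformed rule: $line"
    }
    # Find the closing slash, skipping backslash-escaped characters
    set end -1
    for {set i 0} {$i < [string length $rest]} {incr i} {
        set c [string index $rest $i]
        if {$c eq "\\"} {
            incr i
        } elseif {$c eq "/"} {
            set end $i
            break
        }
    }
    if {$end < 0} {
        error "malformed rule: $line"
    }
    set re [string range $rest 0 [expr {$end - 1}]]
    set comment [string trim [string range $rest [expr {$end + 1}] end]]
    # Assumption: Perl-style \b word boundaries are mapped to Tcl's \y
    set re [string map {\\b \\y} $re]
    return [list $status $re $comment]
}

# Parse the text of a rules file, skipping comments and blank lines
proc parse_rules {text} {
    set rules {}
    foreach line [split $text "\n"] {
        set rule [parse_rule_line $line]
        if {[llength $rule] > 0} {
            lappend rules $rule
        }
    }
    return $rules
}

# The first matching rule wins; rules are passed in the order grid, group, root
proc match_line {rules line} {
    foreach rule $rules {
        lassign $rule status re comment
        if {[regexp -- $re $line]} {
            return [list $status $comment]
        }
    }
    return {}
}

set grid_rules [parse_rules {IGNORE /\bError\b.*tolerance/ tolerance warnings are expected in this grid}]
set group_rules [parse_rules {
# errors reported by test scripts
FAILED /\bError\b/ error
FAILED /\bFaulty\b/ bad shape
}]
set all_rules [concat $grid_rules $group_rules]

puts [match_line $all_rules "Error: tolerance is too big"]
puts [match_line $all_rules "Error: the result shape was not built"]
```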

A group folder can have several subdirectories (Grid 1 … Grid N) defining test grids. Each test grid directory contains a set of related test cases. The name of the directory should correspond to its contents.

Example:

caf/basic, caf/bugs, caf/presentation

Here caf is the name of the test group, and basic, bugs, presentation, etc. are the names of its grids.

The file begin is a Tcl script. It is executed before every test in the current grid. Usually it sets variables specific to the current grid. Example:
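A hypothetical grid begin script that only defines parameters; the variable names are illustrative and would be read by other scripts of the same group:

```tcl
# Parameters shared by all test cases of this grid (hypothetical names)
set tolerance 1.e-7
set check_volume 1
```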

The file end is a Tcl script. It is executed after every test in the current grid. Usually it executes a specific sequence of commands common to all tests in the grid. Example:
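A hypothetical grid end script, assuming every case of the grid builds a solid named result whose volume properties are of interest:

```tcl
# Print the volume properties of the result for every case of this grid
vprops result
```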

The grid directory can contain an optional file cases.list defining an alternative location of the test cases. This file should contain a single line defining the relative path to the collection of test cases.

Example:

../data/simple

This option is used to create several grids of tests with the same data files and operations but performed with different parameters. The common scripts are usually located in a common subdirectory of the test group (data/simple in the example above). If the cases.list file exists, the grid directory should not contain any test cases. The specific parameters and the pre- and post-processing commands for the test execution in this grid should be defined in the begin and end files.

A test case is a Tcl script that performs some operations using DRAW commands and produces meaningful messages that can be used to check whether the result is valid. Example:
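A minimal self-contained case, shown only as a sketch:

```tcl
# Build a 10 x 20 x 30 box and check its validity
box b 10 20 30
checkshape b
```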

A test case can have any name (except the reserved names begin, end, data, cases.list, and parse.rules). For systematic grids, it is usually a capital English letter followed by a number.

Example:

A1, A2, B1, B2

Such naming facilitates a compact representation of the test results in tabular format within HTML reports.

The test group may contain the subdirectory data. Usually it contains data files used in tests (BREP, IGES, STEP, etc.) and/or test scripts shared by different test grids (in subdirectories, see the Grid’s cases.list file chapter).

This section describes how to add new tests and update existing ones.

New tests are usually added in the context of fixing bugs. Such tests should generally be added to the group bugs, in the grid corresponding to the affected OCCT functionality. New grids can be added as necessary for tests on functionality not yet covered by the existing test grids. The name of a test case in the bugs group should be prefixed by the ID of the corresponding issue in Mantis (without leading zeroes). It is recommended to add a suffix giving a hint on the situation being tested. If more than one test is added for a bug, they should be distinguished by suffixes, either meaningful ones or just ordinal numbers.

Example:

If a new test corresponds to functionality for which a specific group of tests exists (e.g. the group mesh for BRepMesh issues), the test can be added to this group (or moved there later by the OCC team).

It is advisable to make test scripts self-contained whenever possible, so that they can be used in environments where data files are not available. For that, simple geometric objects and shapes can be created using DRAW commands in the test script itself. If a test requires a data file, the file should be put into the subdirectory data of the test grid. Note that when the test is integrated into the master branch, the OCC team can move the data file to the data files repository, so as to keep the OCCT sources repository free of big data files. When preparing a test script, try to minimize the size of the involved data model. For instance, if a problem detected on a big shape can be reproduced on a single face extracted from that shape, use only this face in the test.

A test should run the commands necessary to perform the operations being tested, in a clean DRAW session. This includes loading the necessary functionality with the pload command, if this is not done by the begin script. The messages produced by the commands in the standard output should include identifiable messages on the discovered problems, if any. Usually the script represents a set of commands that a person would run interactively to perform the operation and see its results, with additional comments explaining what happens. Example:
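A hypothetical test case written in this style; it assumes the MODELING plugin provides the box, explode, blend and checkshape commands:

```tcl
# Load modeling commands (skip if the begin script already does it)
pload MODELING
# Create a 10 x 10 x 10 box
box b 10 10 10
# Explode the box into its edges b_1, b_2, ...
explode b e
# Fillet the first edge with radius 1
blend result b 1 b_1
# Check the validity of the result; problems are reported with the word Faulty
checkshape result
```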

Make sure that the file parse.rules in the grid or group directory contains a regular expression to catch possible messages indicating a failure of the test. For instance, to catch errors reported by the checkshape command, the relevant grids define a rule that recognizes its report by the word Faulty:

FAILED /\bFaulty\b/ bad shape

For messages generated in the script, the most natural way is to use the word Error in the message. Example:
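For instance, a script could report a missing result as follows; the check and the message text are illustrative:

```tcl
# Report the problem with the word Error so that parse.rules can catch it
if {![isdraw result]} {
    puts "Error: the result shape was not built"
}
```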

At the end, the test script should output the string TEST COMPLETED to mark the successful completion of the script. This is often done by the end script of the grid. When a test script requires a data file, use the Tcl procedure locate_data_file to get the path to the data file instead of an explicit path. This allows the data file to be moved from the OCCT repository to the data files repository without updating the test script. Example:
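A sketch with a hypothetical data file name bug12345_part.brep:

```tcl
# Get the full path of the data file instead of hard-coding it
restore [locate_data_file bug12345_part.brep] a
checkshape a
```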

When a test needs to produce snapshots or other artifacts, use the Tcl variable logdir as the location for such files. The command testgrid sets this variable to the subdirectory of the results folder corresponding to the grid. The command test sets it to $CASROOT/tmp unless it is already defined. Use the Tcl variable casename, which is set to the name of the test case, to prefix all files produced by the test. Example:
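A plain-Tcl sketch of the file naming. Outside DRAW the two variables get hypothetical fallback values, and the DRAW commands that would actually write the files are shown only as comments:

```tcl
# logdir and casename are defined by testgrid/test; the fallback values
# below are hypothetical and only let the snippet run outside DRAW
if {![info exists logdir]} {
    set logdir d:/occt/results/caf/basic
}
if {![info exists casename]} {
    set casename A1
}

# All cases of a grid share the same logdir, so prefix every file
# with the case name to keep the files of different cases apart
set image_file [file join $logdir ${casename}.png]
set shape_file [file join $logdir ${casename}_result.brep]

# Inside DRAW the files could then be written with, e.g.:
#   vdump $image_file
#   save result $shape_file
puts $image_file
puts $shape_file
```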

could produce:

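The result of the test is evaluated by checking its output against the patterns defined in the parse.rules files of the grid and group. The OCCT test system recognizes five statuses of test execution: OK (no problems detected), BAD (only known problems, marked as TODO, are detected), FAILED (an error pattern from parse.rules is detected), SKIPPED (the test was not executed, e.g. because a data file is missing), and IMPROVEMENT (some of the expected known problems were not detected).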

Other statuses can be specified in the parse.rules files; these are classified as FAILED. Before a change is integrated into the OCCT repository, all tests should return either the OK or the BAD status. A new test created for an unsolved problem should return BAD. A new test created for a fixed problem should return FAILED without the fix and OK with the fix.

If a test produces an invalid result at some point, the corresponding bug should be registered in the OCCT issue tracker http://tracker.dev.opencascade.org, and the problem should be marked as TODO in the test script. The following statement should be added to such a test script:
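Assuming the usual form of this statement, in which BugNumber is the issue ID in the tracker, ListOfPlatforms lists the platforms (values of os_type) where the problem is reproduced, or All for every platform, and RegularExpression matches the expected error message:

```tcl
puts "TODO BugNumber ListOfPlatforms: RegularExpression"
```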


Note: the platform name is specific to the OCCT test system; it can be found as the value of the environment variable os_type defined in DRAW.

Example:
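For a hypothetical issue OCC12345 reproduced on all platforms, assuming All is accepted as the platform list:

```tcl
puts "TODO OCC12345 All: Error: the result shape was not built"
```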

The parser checks the output of the test, and if an output line matches the RegularExpression, it is assigned the BAD status instead of FAILED. A separate TODO line must be added for each output line matching an error expression to mark the test as BAD. If not all TODO statements are matched in the test log, the test is considered a possible improvement. To mark the test as BAD for an incomplete case (when the final TEST COMPLETED message is missing), the expression TEST INCOMPLETE should be used instead of a regular expression.

Example:
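For a hypothetical issue OCC12345 where the case stops before printing TEST COMPLETED:

```tcl
puts "TODO OCC12345 All: TEST INCOMPLETE"
```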

Sometimes it might be necessary to run tests on previous versions of OCCT (up to 6.5.3) that do not include this test system. This can be done by adding the DRAW configuration file DrawAppliInit in the directory that is current at the moment of DRAW startup, to load the test commands and define the necessary environment. Example (assuming that d:/occt contains an up-to-date version of the OCCT sources with tests, and the test data archive is unpacked to d:/test-data):
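A sketch of such a DrawAppliInit using the paths given above; the location of the file defining the test commands is an assumption, so check it in your copy of the sources:

```tcl
# Test scripts and test data of the up-to-date sources
set env(CSF_TestScriptsPath) d:/occt/tests
set env(CSF_TestDataPath) d:/test-data
# Load the test commands (testgrid, test, ...); assumed file location
source d:/occt/src/DrawResources/TestCommands.tcl
```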

Note that on older versions of OCCT the tests are run in compatibility mode, and not all output of the test commands can be captured; this can lead to the absence of some error messages (which can be reported as an improvement).

You can extend the test system by adding your own tests. For that, add the paths to the directories where these tests are located, and to any additional data directories, to the environment variables CSF_TestScriptsPath and CSF_TestDataPath. The recommended way to do this is the DRAW configuration file DrawAppliInit located in the directory that is current at the moment of DRAW startup.

Use the Tcl command _path_separator to insert the platform-dependent separator into the path list. Example:
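A sketch of the corresponding DrawAppliInit lines. The folders d:/MyProject/tests and d:/MyProject/data and the helper append_path are hypothetical; the stand-in for _path_separator is only there so that the snippet also runs in a plain Tcl shell:

```tcl
# _path_separator is provided by DRAW. Outside DRAW, this stand-in (an
# assumption that mimics the described behaviour) lets the snippet run.
if {[info commands _path_separator] eq ""} {
    proc _path_separator {} {
        return [expr {$::tcl_platform(platform) eq "windows" ? ";" : ":"}]
    }
}

# Hypothetical helper: append a directory to a path-list environment
# variable, or create the variable if it is not defined yet
proc append_path {var dir} {
    global env
    if {[info exists env($var)] && $env($var) ne ""} {
        set env($var) "$env($var)[_path_separator]$dir"
    } else {
        set env($var) $dir
    }
}

# Hypothetical locations of your own tests and test data
append_path CSF_TestScriptsPath d:/MyProject/tests
append_path CSF_TestDataPath d:/MyProject/data
```

Keeping the existing value and appending to it preserves any folders already listed, including the OCCT tests folder that DRAW adds.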